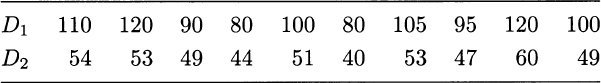

So far, when dealing with a sequence of random variables, we have always assumed that they were independent. At last, we investigate the issue of dependence. To get the basic intuition, consider the hypothetical demand data in Table 8.1.

Table 8.1 Demand data for two items: Are they independent?

Can we say that the two random variables $D_1$ and $D_2$ are independent? Looking at the raw data may be a bit confusing, but things become much clearer if we consider the sample means, $\bar{D}_1$ and $\bar{D}_2$. We observe that whenever $D_1$ is above its average, $D_2$ tends to be above its average, too; vice versa, whenever $D_1$ is below average, so is $D_2$. Hence, there is a sort of concordance between the two random variables, which should not be there if they were independent. Capturing this concordance leads us to the definitions of covariance and correlation.

. We observe that whenever D1 is above average, D2 tends to be, too; vice versa, whenever D1 is below average, D2 tends to be, too. Hence, we have a sort of concordance between the two random variables, which should not be there if the two variables were independent. Capturing this concordance leads us to the definition covariance and correlation.
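The concordance idea can be made concrete by looking at the product of the deviations from the sample means: the product is positive when both observations lie on the same side of their averages and negative otherwise. The sketch below is illustrative only. The function name `concordance_summary` is hypothetical, the sample covariance uses the common $n-1$ divisor (an assumption here, since the formal definitions are only given in Section 8.3), and the numbers in the usage example are made up. They are *not* the data of Table 8.1.

```python
from statistics import mean


def concordance_summary(d1, d2):
    """Hypothetical helper: summarize how two paired samples co-move.

    Returns (mean1, mean2, concordant, covariance, correlation), where
    `concordant` counts the pairs lying strictly on the same side of their
    respective sample means, `covariance` is the sample covariance with
    divisor n - 1 (an assumed convention), and `correlation` is the sample
    correlation coefficient.
    """
    if len(d1) != len(d2) or len(d1) < 2:
        raise ValueError("need two paired samples of equal length >= 2")
    n = len(d1)
    m1, m2 = mean(d1), mean(d2)

    # Deviations of each observation from its own sample mean.
    dev1 = [x - m1 for x in d1]
    dev2 = [y - m2 for y in d2]

    # Positive product: both above or both below average (concordance).
    products = [a * b for a, b in zip(dev1, dev2)]
    concordant = sum(1 for p in products if p > 0)

    # Sample covariance is the average product of deviations (divisor n - 1).
    cov = sum(products) / (n - 1)

    # Sample standard deviations with the same divisor, for the correlation.
    s1 = (sum(a * a for a in dev1) / (n - 1)) ** 0.5
    s2 = (sum(b * b for b in dev2) / (n - 1)) ** 0.5
    corr = cov / (s1 * s2)
    return m1, m2, concordant, cov, corr


if __name__ == "__main__":
    # Made-up demand figures for illustration only (not Table 8.1).
    d1 = [10, 12, 8, 14, 6]
    d2 = [21, 23, 17, 25, 14]
    m1, m2, concordant, cov, corr = concordance_summary(d1, d2)
    print(m1, m2, concordant, cov)  # 10 20 4 14.0
    print(round(corr, 2))           # 0.99
```

With these invented numbers, four of the five pairs lie on the same side of their means (in the remaining pair $D_1$ sits exactly at its mean), so the products of deviations are nonnegative, the sample covariance is positive, and the correlation is close to 1. For two independent variables, positive and negative products would tend to offset each other, pushing the sample covariance toward zero.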

Before doing so, we introduce the formal concepts of joint and marginal distributions in Section 8.1. The mathematics here is a bit more involved than elsewhere, and readers who only want an intuitive understanding can skip this section. In Section 8.2, we summarize the properties of independent random variables. Then, in Section 8.3, we characterize the interdependence between two random variables in terms of covariance and correlation; we illustrate the role of these concepts in risk management and also point out their limitations. Multivariate distributions go beyond the scope of an introductory text, so we will not cover them in depth, but in Section 8.4 we treat one fundamental case: the multivariate normal distribution. Finally, in Section 8.5, we take advantage of the possible links between random variables to compute expectations by conditioning, since conditional expectation is an essential concept in many applications.

Leave a Reply