Tools take over repetitious testing tasks

People who refer to “test tools” are often actually referring to the tools used to automate dynamic testing. These are tools that relieve testers of repetitious “mechanical” testing tasks such as providing a test object with test data, recording the test object’s reactions, and logging the test process.

Probe effects

In most cases, these tools run on the same hardware as the test object, which can influence the test object’s behavior. For example, the actual response times of the tested application or the overall memory usage may differ when the test object and the test tool run in parallel, and the test results may therefore differ too. Accordingly, you need to take such interactions (often called “probe effects”) into account when evaluating your test results. Because these tools are connected to the test object’s test interface, their nature varies considerably depending on the test level they are used on.

Unit test frameworks

Tools that are designed to address test objects via their APIs are called unit test frameworks. They are used mainly for component and integration testing or for a number of special system testing tasks. A unit test framework is usually tailored to a specific programming language.

JUnit is a good example of a unit test framework for use with the Java programming language, and many others are available online ([URL: xUnit]). Unit test frameworks are also the foundation of test-driven development.
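As a rough illustration of what a test written with such a framework looks like, the following sketch uses Python’s built-in unittest module, which belongs to the same xUnit family as JUnit. The function price_with_discount and the helper run_tests are hypothetical names invented for this example and are not part of any real project.

```python
import unittest


def price_with_discount(base_price, discount_percent):
    """Hypothetical function under test: apply a percentage discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount must be between 0 and 100")
    return round(base_price * (100 - discount_percent) / 100, 2)


class PriceWithDiscountTest(unittest.TestCase):
    """Each test method addresses the test object directly via its API."""

    def test_regular_discount(self):
        self.assertEqual(price_with_discount(20000.0, 10), 18000.0)

    def test_no_discount(self):
        self.assertEqual(price_with_discount(20000.0, 0), 20000.0)

    def test_invalid_discount_is_rejected(self):
        with self.assertRaises(ValueError):
            price_with_discount(20000.0, 150)


def run_tests():
    """Load and run the test case, returning the framework's result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PriceWithDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

The framework takes care of the mechanical work (calling each test method, comparing expected and actual values, and logging the outcome), so the tester only has to describe the inputs and the expected results.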

System testing using test robots

If the test object’s user interface serves as the test interface, you can use so-called test robots, which are also known as “capture and replay” tools (for obvious reasons!). During a session, the tool captures all manual user operations (keystrokes and mouse actions) that the tester performs and saves them in a script.

Running the script enables you to automatically repeat the same test as often as you like. This principle appears simple and extremely useful but does have some drawbacks.

How Capture/Replay Tools Work

Capture

In capture mode, the tool records all keystrokes and mouse actions, including the position and the operations it sets in motion (such as clicked buttons). It also records the attributes required to identify the object (name, type, color, label, x/y coordinates, and so on).

Expected/actual comparison

To check whether the program behaves correctly, you can record expected/actual behavior comparisons, either during capture or later during script post-processing. This enables you to verify the test object’s functional characteristics (such as field values or the contents of a pop-up message) and also the layout-related characteristics of graphical elements (such as the color, position, or size of a button).

Replay

The resulting script can then be replayed as often as you like. If the values diverge during an expected/actual comparison, the test fails and the robot logs a corresponding message. This ability to automatically compare expected and actual behaviors makes capture/replay tools ideal for automating regression testing.

One common drawback occurs if extensions or alterations to the program alter the test object’s GUI between tests. In this case, an older script will no longer match the current version of the GUI and may halt or abort unexpectedly. Today’s capture/replay tools usually use attributes rather than x/y coordinates to identify objects, so they are quite good at recognizing objects in the GUI, even if buttons have been moved between versions. This capability is called “GUI object mapping” or “smart imaging technology” and test tool manufacturers are constantly improving it.
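The difference between coordinate-based and attribute-based identification can be sketched in a few lines. This is only a simplified model, not how any particular tool implements GUI object mapping; the Widget class, the two lookup functions, and the widget names are assumptions made up for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Widget:
    """Simplified stand-in for a GUI object as a capture tool might record it."""
    name: str
    kind: str
    label: str
    x: int
    y: int


def find_by_position(widgets, x, y):
    """Coordinate-based lookup: breaks as soon as the widget is moved."""
    for widget in widgets:
        if (widget.x, widget.y) == (x, y):
            return widget
    return None


def find_by_attributes(widgets, **attributes):
    """Attribute-based lookup: matches on properties such as name and type."""
    for widget in widgets:
        if all(getattr(widget, key) == value for key, value in attributes.items()):
            return widget
    return None


# The same button in two versions of the GUI; it was moved in version 2.
gui_v1 = [Widget("btn_save", "button", "Save", 100, 200)]
gui_v2 = [Widget("btn_save", "button", "Save", 140, 260)]
```

A script that recorded the position (100, 200) finds the button in gui_v1 but not in gui_v2, whereas a lookup using name="btn_save" and kind="button" succeeds in both versions.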

Test programming

Capture/replay scripts are usually recorded using scripting languages similar to or based on common programming languages (such as Java), and offer the same building blocks (statements, loops, procedures, and so on). This makes it possible to implement quite complex test sequences by coding new scripts or editing scripts you have already recorded. You will usually have to edit your scripts anyway, as even the most sophisticated GUI object mapping functionality rarely delivers a regression test-capable script at the first attempt. The following example illustrates the situation:

Case Study: Automated testing for the VSR-II ContractBase module

The tests for the VSR-II ContractBase module are designed to check whether vehicle purchase contracts are correctly saved and also retrievable. In the course of test automation, the tester records the following operating sequence:

Switch to the “contract data” form;

Enter customer data for person “Smith”;

Set a checkpoint;

Save the “Smith” contract in the database;

Leave the “contract data” form;

Reenter the form and load the “Smith” contract from the database;

Check the contents of the form against the checkpoint;

If the comparison delivers a match, we can assume that the system saves contracts correctly. However, the script stalls when the tester re-runs it. So what is the problem?

Is the script regression test-ready?

During the second run, the script stalls because the contract has already been saved in the database. A second attempt to save it then produces the following message:

“Contract already saved.

Overwrite: Yes/No?”

The test object then waits for keyboard input but, because the script doesn’t contain such a keystroke, the script halts.

The two test runs have different preconditions. The script assumes that the contract has not yet been saved to the database, so the captured script is not regression test-capable. To work around this issue, we either have to program multiple cases to cover the different states or simply delete the contract as a “cleanup” action for this particular test case.
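The cleanup variant can be sketched as follows. The ContractDatabase class is a hypothetical in-memory stand-in for the real system, and the OverwritePrompt exception merely simulates the dialog that blocks the script; neither reflects the actual VSR-II implementation.

```python
class OverwritePrompt(Exception):
    """Simulates the 'Contract already saved. Overwrite: Yes/No?' dialog."""


class ContractDatabase:
    """Hypothetical in-memory stand-in for the contract database."""

    def __init__(self):
        self._contracts = {}

    def save(self, customer, data):
        if customer in self._contracts:
            # The recorded script has no keystroke for this situation.
            raise OverwritePrompt(customer)
        self._contracts[customer] = dict(data)

    def load(self, customer):
        return dict(self._contracts[customer])

    def delete(self, customer):
        self._contracts.pop(customer, None)


def recorded_script(db):
    """The script as captured: enter data, set checkpoint, save, reload, compare."""
    form = {"customer": "Smith", "vehicle": "sedan"}
    checkpoint = dict(form)
    db.save("Smith", form)
    return db.load("Smith") == checkpoint


def regression_ready_script(db):
    """Same steps, plus a cleanup action that restores the precondition."""
    try:
        return recorded_script(db)
    finally:
        db.delete("Smith")
```

Calling recorded_script twice against the same database triggers the simulated prompt on the second run, while regression_ready_script can be repeated as often as required because every run leaves the database in the state the next run expects.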

This example illustrates why recorded scripts need to be reviewed and edited. You therefore need programming skills to create this kind of automated test. Furthermore, if you aim to produce automation solutions with a long lifecycle, you also need to use a suitable modular architecture for your test scripts.

Test automation architectures

Using a predefined structure for your scripts helps to save time and effort when it comes to automating and maintaining individual tests. A well-structured automation architecture also helps to divide the work reasonably between test automators and test specialists.

You will often find that a particular script is repeated regularly using varying test data sets. For instance, in the example above, the test needs to be run for other customers too, not just Ms. Smith.

Data-driven test automation

One obvious way to produce a clear structure and reduce effort is to separate the test data from the scripts. Test data are usually saved in a spreadsheet that includes the expected results. The script reads a data set, runs the test, and repeats the cycle for each subsequent data set. If you require additional test cases, all you have to do is add a line to the spreadsheet while the script remains unchanged. Using this method—called “data-driven testing”—even testers with no programming skills can add and maintain test cases.
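A minimal sketch of this idea is shown below. It assumes the spreadsheet has been exported as CSV text; the column names, the sample rows, and the save_contract function are invented for the illustration.

```python
import csv
import io

# Test data kept apart from the script; each row is one test case.
# In practice this would be a spreadsheet or CSV file maintained by testers.
TEST_DATA = """customer,vehicle,expected_saved
Smith,sedan,yes
Jones,van,yes
,coupe,no
"""


def save_contract(database, customer, vehicle):
    """Hypothetical test object: rejects contracts without a customer name."""
    if not customer:
        return False
    database[customer] = vehicle
    return True


def run_data_driven(csv_text):
    """Read one data set, run the test, and repeat for every further row."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        database = {}  # fresh precondition for every test case
        actual = save_contract(database, row["customer"], row["vehicle"])
        expected = row["expected_saved"] == "yes"
        verdict = "pass" if actual == expected else "fail"
        results.append((row["customer"], verdict))
    return results
```

Adding a test case means adding a row to TEST_DATA; run_data_driven itself stays untouched.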

Comprehensive test automation often requires certain test procedures to be repeated multiple times. For example, if the ContractBase component needs to be tested for pre-owned vehicle purchases as well as new vehicle purchases, it would be ideal if the same script could be used for both scenarios. Such re-use is possible if you encapsulate the appropriate steps in a procedure (in our case, called something like check_contract(customer)). You can then call and re-use the procedure from within as many test sequences as you like.

Keyword-driven test automation

If you apply an appropriate degree of granularity and choose your procedure names carefully, the resulting set of names, or keywords, reflects the actions and objects from your application domain (in our case, actions and objects such as select(customer), save(contract), or submit_order(vehicle)). This methodology is known as command-driven or keyword-driven testing.

As with data-driven testing, the test cases composed from the keywords and the test data are often saved in spreadsheets, so test specialists who have no programming skills can easily work on them.

To make such tests executable by a test automation robot, each keyword also has to be implemented by a corresponding script using the test robot’s programming language. This type of programming is best performed by experienced testers, developers, or test automation specialists.
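The division of labor can be sketched like this: the keyword implementations are written once by someone with programming skills, while the test case itself is just a table of keywords and data. The keyword names loosely follow the examples above; the context dictionary, the function names, and the sample test case are assumptions made for this sketch.

```python
def kw_select(context, customer):
    """Keyword 'select': make a customer the current one."""
    context["current"] = customer


def kw_save(context, vehicle):
    """Keyword 'save': store a contract for the current customer."""
    context["db"][context["current"]] = vehicle


def kw_check_contract(context, expected_vehicle):
    """Keyword 'check_contract': compare the stored contract with the expectation."""
    actual = context["db"].get(context["current"])
    if actual != expected_vehicle:
        raise AssertionError(f"expected {expected_vehicle!r}, got {actual!r}")


# Maps each keyword used in the test tables to the script implementing it.
KEYWORDS = {
    "select": kw_select,
    "save": kw_save,
    "check_contract": kw_check_contract,
}

# A test case as a test specialist might write it in a spreadsheet:
# one keyword and one data value per row.
TEST_CASE = [
    ("select", "Smith"),
    ("save", "sedan"),
    ("check_contract", "sedan"),
]


def run_keyword_test(steps):
    """Execute the rows in order by dispatching each keyword to its script."""
    context = {"db": {}, "current": None}
    for keyword, argument in steps:
        KEYWORDS[keyword](context, argument)
    return context
```

New test sequences can then be composed from the existing keywords without touching the code behind them.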

Keyword-driven testing isn’t easily scalable, and long lists of keywords and complex test sequences quickly make the spreadsheet tables unwieldy. Dependencies between individual actions, or between actions and their parameters, are difficult to track, and the effort involved in maintaining the tables quickly becomes disproportionate to the benefits they offer.

Side Note: tools supporting data-driven and keyword-driven testing

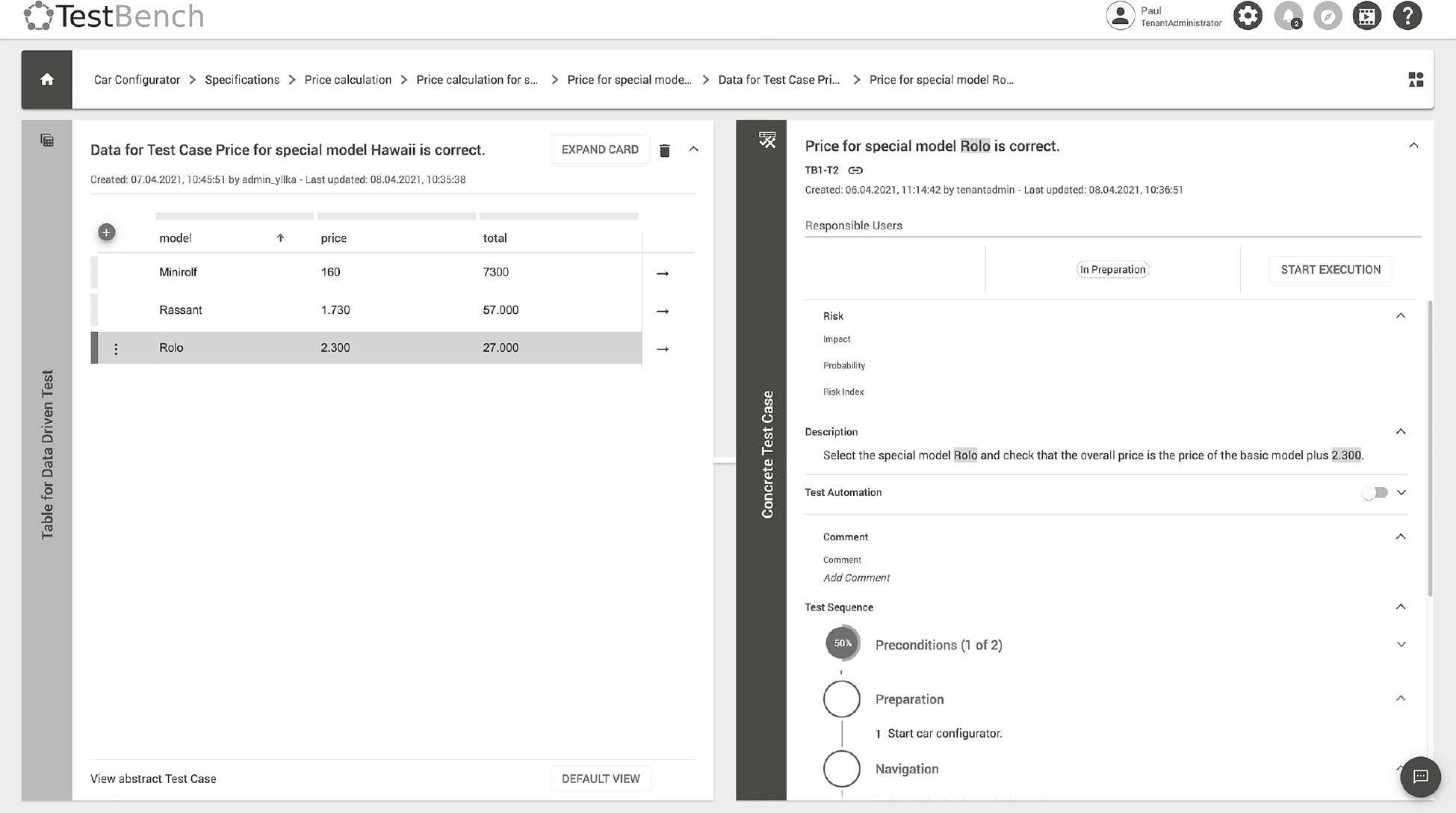

Advanced test management tools offer database-supported data-driven and keyword-driven testing. For example, test case definitions can include test data variables, while the corresponding test data values are stored and managed (as test data rows) within the tool’s database. During test execution the tool automatically substitutes the test data variables with concrete test data values. In a similar way, keywords can be dragged and dropped from the tool’s keyword repository to create new test sequences, and the corresponding test data are loaded automatically, regardless of their complexity. If a keyword is altered, all affected test cases are identified by the tool, which significantly reduces the effort involved in test maintenance.

Fig. 7-1 Data-driven testing using TestBench [URL: TestBench CS]

Comparators

Comparators are tools that compare expected and actual test results. They can process commonly used file and database formats and are designed to identify discrepancies between expected and actual data. Test robots usually have built-in comparison functionality that works with console content, GUI objects, or copies of screen contents. Such tools usually have filter functionality that enables you to bypass data that isn’t relevant to the current comparison. This is necessary, for example, if the file or screen content being tested includes date or time data, as these vary from test run to test run. Comparing differing timestamps would, of course, raise an unwanted flag.
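The filtering idea can be sketched as a small line-by-line comparator. The timestamp format (YYYY-MM-DD HH:MM:SS), the placeholder strings, and the function names are assumptions chosen for this example; real comparators typically offer configurable filters.

```python
import re
from itertools import zip_longest

# Assumed timestamp format for this sketch: YYYY-MM-DD HH:MM:SS
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")


def mask(line):
    """Filter: replace run-specific timestamps with a fixed placeholder."""
    return TIMESTAMP.sub("<TIMESTAMP>", line)


def compare(expected_lines, actual_lines):
    """Return (line number, expected, actual) for every relevant discrepancy."""
    differences = []
    pairs = zip_longest(expected_lines, actual_lines, fillvalue="<missing line>")
    for number, (expected, actual) in enumerate(pairs, start=1):
        if mask(expected) != mask(actual):
            differences.append((number, expected, actual))
    return differences
```

With this filter in place, two logs that differ only in their timestamps compare as equal, while a genuine difference such as a changed status line is still reported.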

Dynamic analysis

Dynamic analysis tools provide additional information on the software’s internal state during dynamic testing (for example, memory allocation, memory leaks, pointer assignment, pointer arithmetic, and so on).

Coverage analysis

Coverage (or “code coverage”) tools provide metrics relating to structural coverage during testing. To do this, a special “instrumentation” component in the code coverage tool inserts measuring instructions into the test object before testing begins. If an instruction is triggered during testing, the corresponding place in the code is marked as “covered”. Once testing is completed, the log is analyzed to provide overall coverage statistics. Most coverage tools provide simple coverage metrics, such as statement or decision coverage. When interpreting coverage data, it is important to remember that different tools may deliver differing results and that a single metric can be defined differently from tool to tool.
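The principle of instrumentation can be illustrated with a hand-instrumented function. Real coverage tools insert their measuring instructions automatically; here the probe calls are written by hand, and the function, the probe names, and the pricing rule are all invented for the sketch.

```python
# Log of all measuring points that were triggered during testing.
covered = set()

# All measuring points the (manual) instrumentation inserted.
ALL_POINTS = {"entry", "discount_branch", "no_discount_branch", "exit"}


def probe(point):
    """Measuring instruction: mark a place in the code as covered."""
    covered.add(point)


def instrumented_price(base_price, is_pre_owned):
    """Hypothetical test object with probes inserted before testing."""
    probe("entry")
    if is_pre_owned:
        probe("discount_branch")
        price = base_price * 0.8
    else:
        probe("no_discount_branch")
        price = base_price
    probe("exit")
    return price


def coverage_percent():
    """Analyze the log once testing is completed."""
    return 100 * len(covered & ALL_POINTS) / len(ALL_POINTS)
```

A single test with is_pre_owned set to True triggers three of the four probes; only a second test that takes the other branch brings the figure to 100 percent. How the points are counted is a choice made by this sketch, which is one reason why figures from different tools are not directly comparable.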

Debuggers

Although they are not strictly test tools, debuggers enable you to run through a program line by line, halt execution wherever you want, and set and read variables at will.

Debuggers are primarily analysis tools used by developers to reproduce failures and analyze their causes. Debuggers can be useful for forcing specific test situations that would otherwise be too complicated to reproduce. They can also be used as interfaces for component testing.
